Path tracing is a Monte Carlo variant of ray tracing that computes a numerical solution to the integral in the rendering equation. Given an accurate scene description and enough samples, the resulting image can be very hard to tell apart from a photograph. Both the rendering equation and path tracing were presented by James Kajiya in 1986.

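The rendering equation is an integral equation grounded in physics. It expresses the law of conservation of energy in terms of radiance: the radiance leaving a point on a surface in a given direction is the radiance the surface emits plus the incoming radiance it reflects or transmits in that direction. Radiance itself is the radiant flux emitted, reflected, transmitted or received by a surface, per unit solid angle and per unit projected area.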

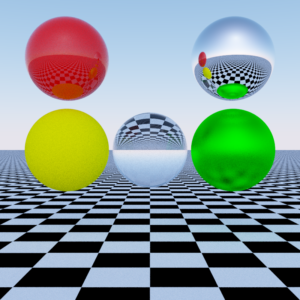
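
For readers who cannot see the image, here is one common way of writing the rendering equation, in its reflection-only form. The notation in the image may differ. The symbols are the usual ones and are assumptions as far as this post is concerned: L_o is the outgoing radiance at point x in direction ω_o, L_e the emitted radiance, f_r the BRDF, L_i the incoming radiance from direction ω_i, and n the surface normal. The second line is the Monte Carlo estimator that path tracing is built on, with N sampled directions ω_k drawn from a probability density p.

```latex
L_o(x, \omega_o) = L_e(x, \omega_o)
  + \int_{\Omega} f_r(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\, (\omega_i \cdot n)\, d\omega_i

L_o(x, \omega_o) \approx L_e(x, \omega_o)
  + \frac{1}{N} \sum_{k=1}^{N}
    \frac{f_r(x, \omega_k, \omega_o)\, L_i(x, \omega_k)\, (\omega_k \cdot n)}{p(\omega_k)}
```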

With path tracing, the image gets a lot of effects for free. Some of them are global illumination, reflections, refractions, caustics and color bleeding. Global illumination is the combination of direct and indirect illumination. Direct illumination is when light hits a given surface directly, whereas indirect illumination is when light bounces off one or more other surfaces before it hits the given surface. Reflections occur when light hits a reflective surface, such as a mirror. Refractions occur when light hits a refractive surface, such as glass, and bends as it enters the material. Caustics occur when light is concentrated after being reflected or refracted by a curved surface. Color bleeding occurs when the color of one surface, often described by its albedo, can be seen on another surface. If one surface is green and another white, you may be able to see a green tint on the white surface.

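Normally, when using ray tracing, you’d add explicit lights to your scene to see anything. This is not necessary in path tracing, where any object in the scene can act as an emitter of light. A path tracer that only finds light when a ray happens to hit an emitter, like the one outlined in this post, cannot use point lights: a point light has no area, so the probability of hitting it would be zero. Renderers that sample the lights directly do not have this restriction.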
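
To make the zero-probability argument concrete, here is a minimal sketch. It assumes that directions are sampled uniformly over the hemisphere above a surface point and that a spherical emitter is fully visible inside that hemisphere. The class and method names are hypothetical and not part of any renderer mentioned in this post.

```java
final class EmitterHitProbability {
    // Fraction of uniformly sampled hemisphere directions that hit a sphere
    // with the given radius whose center is at the given distance.
    // The sphere subtends a solid angle of 2 * PI * (1 - cos(alpha)), where
    // sin(alpha) = radius / distance, and the hemisphere covers 2 * PI.
    static double hitProbability(double radius, double distance) {
        if (radius < 0.0 || radius >= distance) {
            throw new IllegalArgumentException("Requires 0 <= radius < distance");
        }
        double sinAlpha = radius / distance;
        double cosAlpha = Math.sqrt(1.0 - sinAlpha * sinAlpha);
        return 1.0 - cosAlpha;
    }

    public static void main(String[] args) {
        // Shrinking the emitter towards a point drives the probability to zero.
        for (double radius : new double[] {1.0, 0.1, 0.01, 0.0}) {
            System.out.printf("radius %.2f -> probability %.8f%n",
                    radius, hitProbability(radius, 5.0));
        }
    }
}
```

A radius of zero corresponds to a point light, and the probability is then exactly zero, so such a light would never contribute to the image.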

A visible drawback of path tracing is noise. The more samples you use, the less noise there will be in the final image, but the error only falls off with roughly the square root of the sample count, so this takes time. There are many ways to reduce noise, such that fewer samples are required for approximately the same end result. One way to reduce it is to use larger light-emitting objects, such as a sky. The probability of a ray hitting a larger object is higher than that of hitting a smaller one.
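
How noise relates to sample count can be illustrated with a small experiment. The sketch below is not a renderer. It assumes a toy integrand, the integral of cos(θ) over the hemisphere, whose exact value is π, and measures how far a Monte Carlo estimate lands from that value on average. All names are hypothetical.

```java
import java.util.Random;

final class NoiseDemo {
    // Estimates the integral of cos(theta) over the hemisphere using
    // uniformly sampled directions. The exact value is PI.
    static double estimate(int sampleCount, Random random) {
        double sum = 0.0;
        for (int i = 0; i < sampleCount; i++) {
            // For uniform hemisphere sampling, cos(theta) is uniform in [0, 1).
            double cosTheta = random.nextDouble();
            // The pdf is 1 / (2 * PI), so each sample contributes cosTheta / pdf.
            sum += cosTheta * 2.0 * Math.PI;
        }
        return sum / sampleCount;
    }

    // Root-mean-square error of the estimate over a number of independent trials.
    static double rmsError(int sampleCount, int trials, long seed) {
        Random random = new Random(seed);
        double sumOfSquares = 0.0;
        for (int t = 0; t < trials; t++) {
            double error = estimate(sampleCount, random) - Math.PI;
            sumOfSquares += error * error;
        }
        return Math.sqrt(sumOfSquares / trials);
    }

    public static void main(String[] args) {
        // Each step uses 16 times more samples than the previous one.
        for (int sampleCount : new int[] {16, 256, 4096}) {
            System.out.printf("%5d samples -> RMS error %.4f%n",
                    sampleCount, rmsError(sampleCount, 2000, 1L));
        }
    }
}
```

Using 16 times as many samples should reduce the error by a factor of about 4, which is why halving the noise in a rendered image costs roughly four times the render time.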

Just as with ray tracing, there are many variations of path tracing. One is bidirectional path tracing.

This blog post will not cover the exact details of an implementation, but the pseudocode below shows the overall picture.

    void render() {
        Camera camera = createCamera();
        World world = createWorld();
        Display display = createDisplay();
        Sampler sampler = createSampler();

        for(int y = 0; y < display.getHeight(); y++) {
            for(int x = 0; x < display.getWidth(); x++) {
                // Accumulates the radiance of all samples for this pixel.
                Color color = Color.BLACK;

                for(int sample = 0; sample < SAMPLE_COUNT; sample++) {
                    // Draw a random 2D sample and warp it with a tent filter.
                    Sample2D sample2D = Sample2D.toExactInverseTentFilter(sampler.sample2D());

                    // Offset the pixel coordinates by the sample to get the camera ray.
                    Ray ray = camera.newRay(sample2D.u + x, sample2D.v + y);

                    color = color.add(integrate(ray, world));
                }

                // Average the samples to get the estimate for this pixel.
                color = color.divide(SAMPLE_COUNT);

                display.update(x, y, color);
            }
        }
    }
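
The pseudocode does not show what `Sample2D.toExactInverseTentFilter` does. Judging by its name, it warps uniform random numbers so that they follow a tent (triangle) distribution. The sketch below shows one common way to do that, by inverting the cumulative distribution function of the tent. It is an assumption about the technique, not the actual implementation behind that method, and the class and method names are hypothetical.

```java
final class TentFilter {
    // Maps a uniform random number u in [0, 1) to an offset in [-1, 1) whose
    // probability density is a tent, 1 - |x|, peaked at 0.
    // This inverts the tent's cumulative distribution function.
    static double warp(double u) {
        double r = 2.0 * u;
        return r < 1.0 ? Math.sqrt(r) - 1.0 : 1.0 - Math.sqrt(2.0 - r);
    }

    public static void main(String[] args) {
        java.util.Random random = new java.util.Random(42L);
        // Offsets cluster around 0, so samples near the pixel center are favored.
        for (int i = 0; i < 5; i++) {
            System.out.println(warp(random.nextDouble()));
        }
    }
}
```

Applying such a warp to each of the two sample components gives offsets that could be added to the pixel coordinates in the same way `sample2D.u` and `sample2D.v` are in `render()`.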

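    Color integrate(Ray ray, World world) {
        int currentDepth = 0;
        Ray currentRay = ray;

        // The light gathered along the path so far.
        Color color = Color.BLACK;

        // Despite its name, this acts as the path throughput: the fraction of
        // light that survives the bounces made so far.
        Color radiance = Color.WHITE;

        while(currentDepth++ < MAXIMUM_DEPTH) {
            Intersection intersection = world.intersection(currentRay);

            if(intersection.isIntersecting()) {
                Primitive primitive = intersection.getPrimitive();
                Material material = primitive.getMaterial();
                Texture textureEmission = primitive.getTextureEmission();
                Color colorEmission = textureEmission.getColorAt(intersection);

                // Add the light emitted by the surface, weighted by the throughput.
                color = color.add(radiance.multiply(colorEmission));

                // Let the material update the throughput and choose the next ray.
                Result result = material.evaluate(radiance, currentRay);

                radiance = result.getRadiance();
                currentRay = result.getRay();
            } else {
                // The ray left the scene.
                break;
            }
        }

        // If the current ray misses everything, add the background (sky) light.
        // This covers both the break above and a ray that is still in flight
        // when MAXIMUM_DEPTH is reached.
        Intersection intersection = world.intersection(currentRay);

        if(!intersection.isIntersecting()) {
            color = color.add(radiance.multiply(world.getBackground().radiance(currentRay)));
        }

        return color;
    }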
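
The pseudocode leaves `material.evaluate` undefined. As an illustration of what such a method might do, the sketch below handles a perfectly diffuse (Lambertian) surface: it picks the next direction with cosine-weighted hemisphere sampling and scales the throughput by the albedo. It is an assumption about one possible material, not the implementation used by the renderer in this post. All names are hypothetical, and plain `double[]` arrays stand in for the `Color` and vector types.

```java
final class DiffuseBounce {
    // Returns a unit direction {x, y, z} in a local frame where the surface
    // normal is (0, 0, 1), sampled with a density proportional to cos(theta).
    // A point is sampled uniformly on the unit disk and projected up onto the
    // hemisphere (Malley's method).
    static double[] sampleCosineHemisphere(double u1, double u2) {
        double r = Math.sqrt(u1);
        double phi = 2.0 * Math.PI * u2;
        return new double[] {r * Math.cos(phi), r * Math.sin(phi), Math.sqrt(1.0 - u1)};
    }

    // Updates an RGB throughput for a Lambertian surface. With cosine-weighted
    // sampling the weight BRDF * cos(theta) / pdf simplifies to the albedo:
    // (albedo / PI) * cos(theta) / (cos(theta) / PI) = albedo.
    static double[] updateThroughput(double[] throughput, double[] albedo) {
        return new double[] {
            throughput[0] * albedo[0],
            throughput[1] * albedo[1],
            throughput[2] * albedo[2]
        };
    }

    public static void main(String[] args) {
        java.util.Random random = new java.util.Random(1L);
        double[] direction = sampleCosineHemisphere(random.nextDouble(), random.nextDouble());
        double[] throughput = updateThroughput(new double[] {1.0, 1.0, 1.0},
                                               new double[] {0.2, 0.8, 0.2});
        System.out.println(java.util.Arrays.toString(direction));
        System.out.println(java.util.Arrays.toString(throughput));
    }
}
```

A green albedo such as (0.2, 0.8, 0.2) tints everything the path gathers after the bounce, which is the mechanism behind the color bleeding described earlier. A real implementation would also transform the sampled direction from the local frame into world space around the surface normal.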

Using my own renderer, with code similar to the one above, I produced the following image. It took a few seconds to generate, so you may still see some noise in there. But, had I not used a sky, you’d see a lot more noise.